When accuracy doesn't help

An introduction to precision, recall, and f1-score metrics to measure a machine learning model's performance.

I once built a machine learning model that predicted with 99.99% accuracy whether a person would die in a car crash on any given day.

My solution was straightforward; I built a model that consistently predicted the person wouldn't die. That's it.

Without getting into the math, your odds of dying in a car crash every time you get behind the wheel are roughly 1 in 7,000,000 or 0.00000014%. So, yes, my model was very accurate!

The problem with accuracy

The car crash example is one of those imbalanced problems where we are interested in detecting an outcome that represents the overwhelming minority of all the samples: Most people will not die in a car crash, so my model, while very accurate, is useless.

This type of problem is widespread. For instance, detecting fraudulent credit card transactions, rare diseases, or selecting spam from legitimate emails are also imbalanced problems.

In general, any time we are working with an imbalanced problem where the negative cases disproportionally outnumber the positive samples, accuracy is not a helpful metric for assessing the performance of a machine learning model.

Recall

We usually want to maximize the model's ability to detect specific outcomes, and we can measure that using a metric called "recall." This metric represents the model's ability to identify all relevant samples in a problem.

To compute the model's recall, we can divide the positive instances detected by the model by the total number of existing positive samples in the dataset. A more formal way to think about this is by breaking these into separate concepts:

The "true positive" (TP) samples are those positive instances the model detected. The "false negative" (FN) samples are those positive instances the model missed. The total number of positive samples is the sum of "true positive" and "false negative" samples.

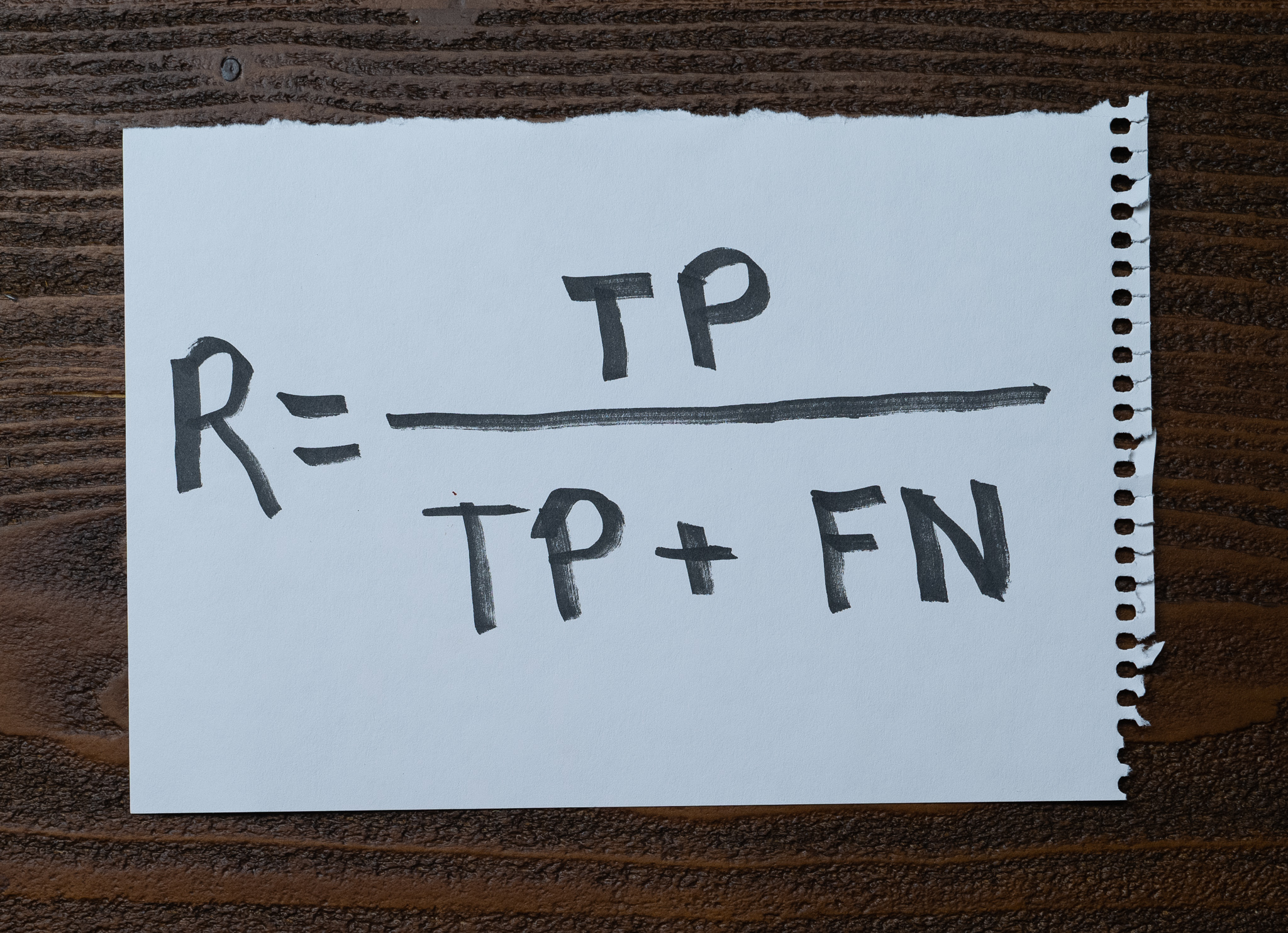

Therefore, we can define the model's recall as follows:

Remember the dumb model I built to predict people dying in car crashes? The model always predicts that you won't die, which is the negative case. I can only get true and false negatives from the model, but I'll never get any positive samples, which means the model's recall will be zero. Computing the recall makes it clear that my model is not practical.

Precision

The model's recall gives us a good representation of the model's ability to identify relevant outcomes. A model with high recall tells us that it can find most of the positive samples in the dataset, which is excellent.

But what happens if I change my model and always return a positive result? Wouldn't that make the recall of the model perfect?

It would, but now I'll be misclassifying every negative sample. Although the model's recall will be high, its "precision" will be very low.

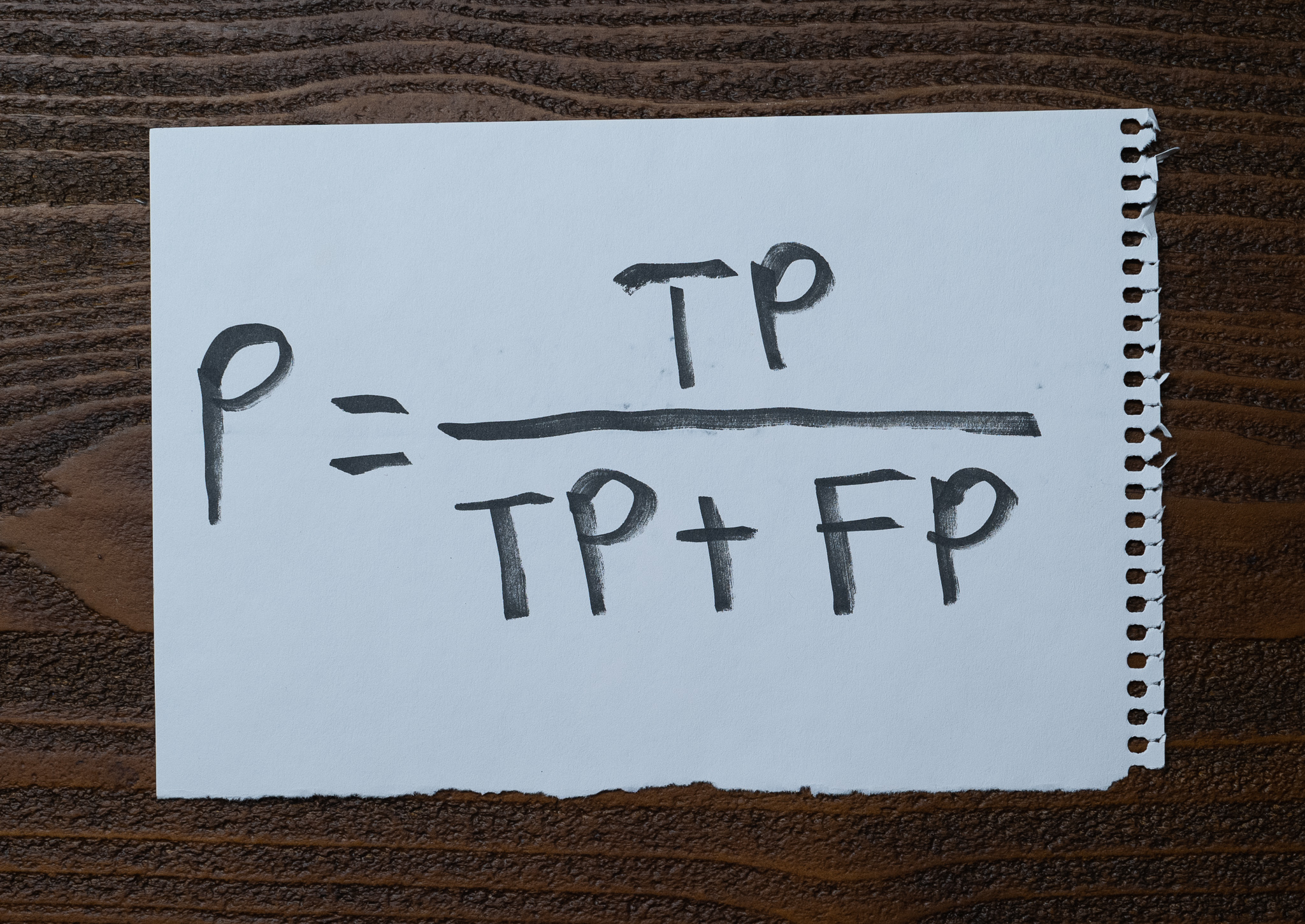

The precision of a model is the other metric that goes hand in hand with the model's recall. Precision measures the ability of a model to identify only relevant samples. We can compute it by dividing the positive instances detected by the model by the total number of cases marked as positive by the model, regardless of whether they are correct. More formally, we need the following terms:

The "true positive" (TP) samples are the positive instances that a model detected. The "false positive" (FP) samples are the negative instances that the model incorrectly classified as positive. The total number of cases the model marked as positive is the sum of "true positive" and "false positive" samples.

Therefore, we can define the model's precision as follows:

This time, although artificially changing my model to have a high recall may look like a good idea, its precision will indicate that the model is still not good.

Finding a balance

Imagine I make a few more changes to my model to focus on detecting a few specific positive samples. The model won't notice any instances that don't fit the mold but at least will catch some and, therefore, be very precise. How would that affect the model's recall?

As we make changes that increase the precision of a model, we will affect its recall. Conversely, as we increase the model's recall, we will reduce its precision. There's a tradeoff between these metrics, which is why we use them together to understand the model's performance better.

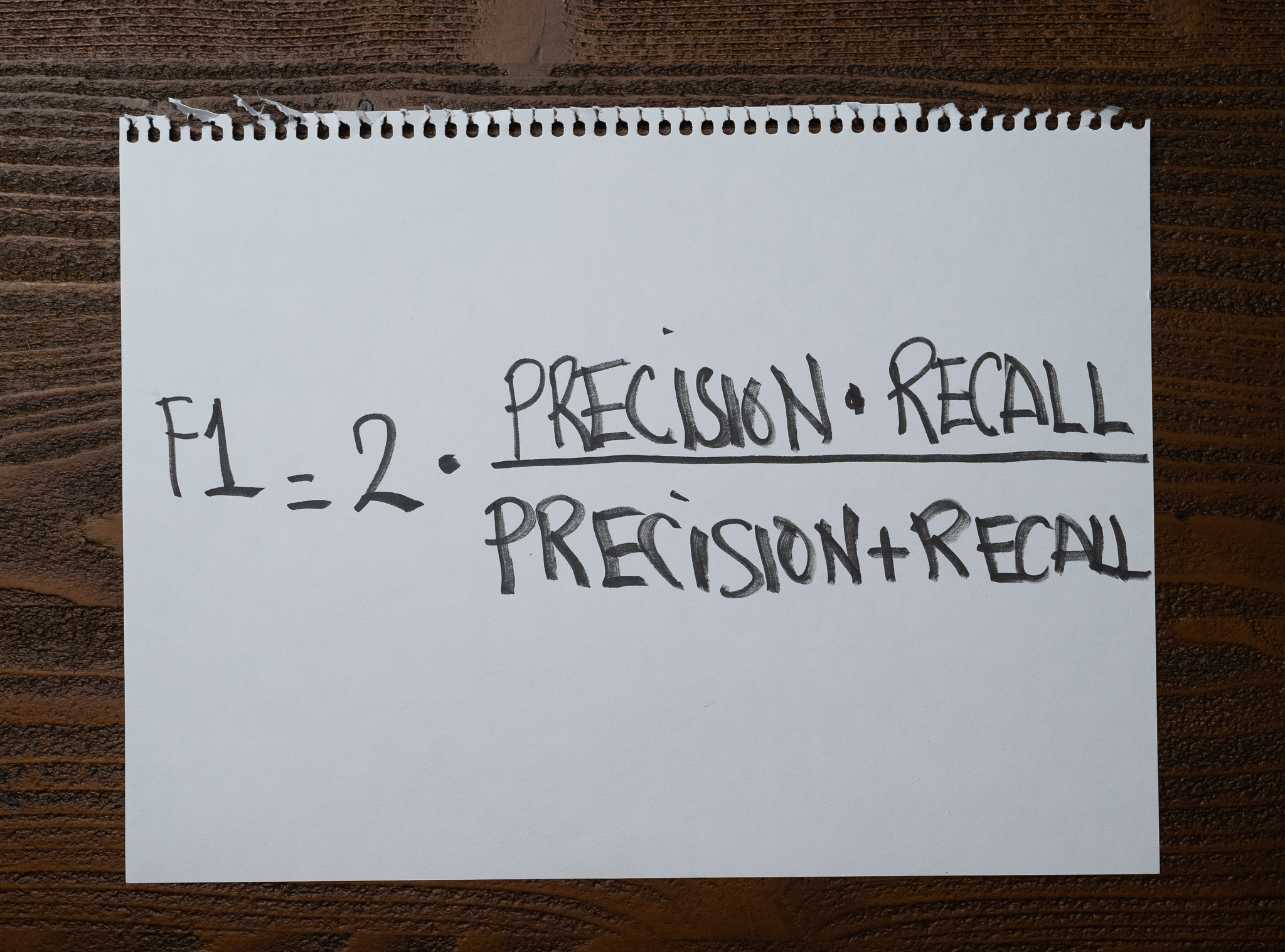

Fortunately, we have a third metric that encapsulates the balance between precision and recall. We call it the f1-score:

The f1-score is the harmonic mean between precision and recall. It gives equal weight to both metrics while punishing any model variation that prioritizes either without regard for the other. F1-score is a version of the more general Fβ-score metric, where we can adjust the value of β to give more weight to either precision or recall.

Using the f1-score, we can compute the performance of a model and ensure its recall and precision are in perfect balance.

Summary

When working on an imbalanced problem, accuracy will not be a helpful metric to assess the model's performance. Precision and recall will help us close the gap, and we can use the f1-score to balance both metrics in a single value.

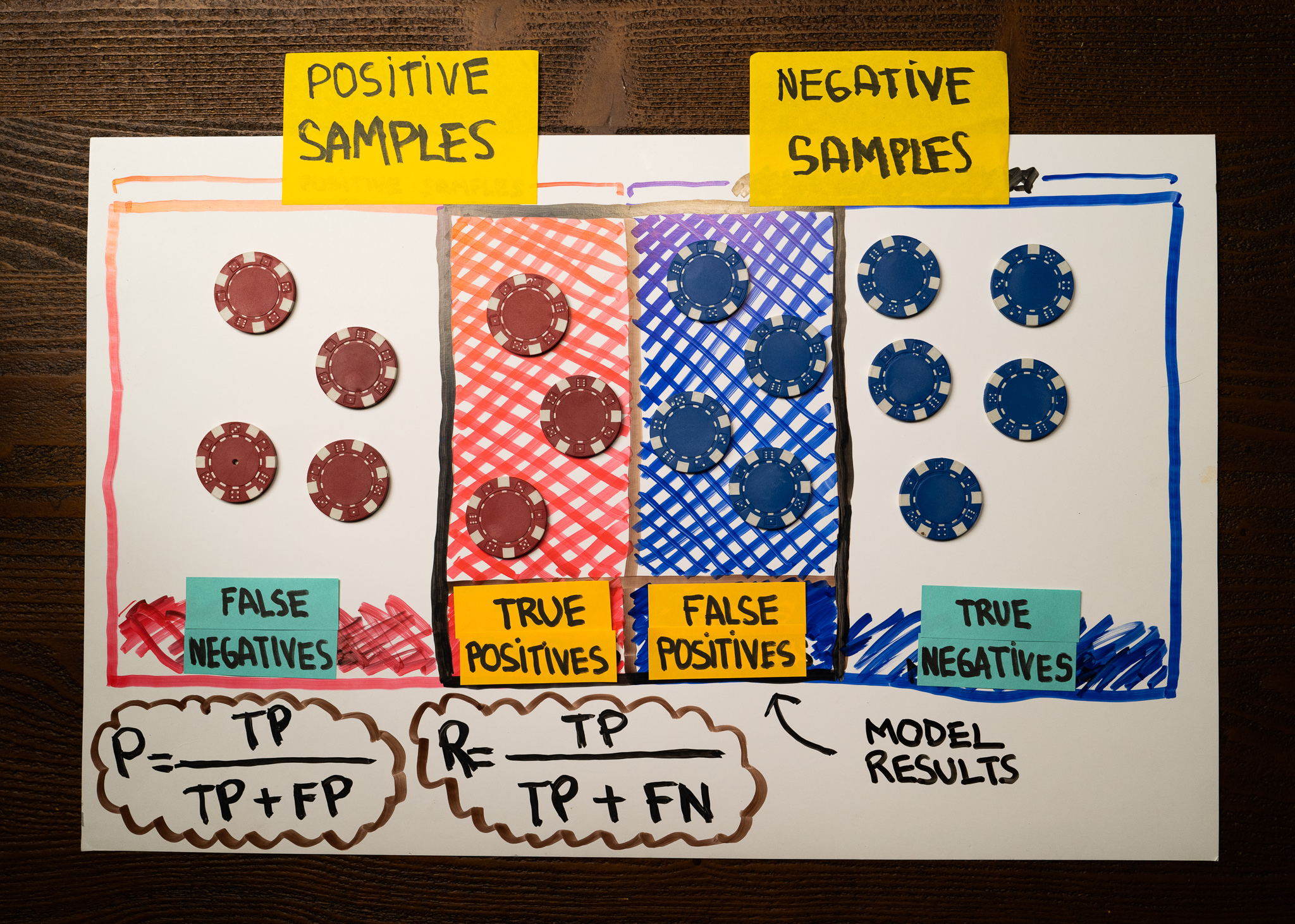

Here is a chart that summarizes how we can look at the results of a model to compute the precision and recall. The red instances are positive outcomes, while the blue ones are the negative instances:

Notice how we can surface the true positive, false positive, true negative, and false negative values from this illustration. A confusion matrix is another way to visualize the model results and get immediate access to a similar breakdown.

Latest articles

- The wrong batch size is all it takes. How different batch sizes influence the training process of neural networks using gradient descent.

- Overfitting and Underfitting with Learning Curves. An introduction to two fundamental concepts in machine learning through the lens of learning curves.

- Confusion Matrix. One of the simplest and most popular tools to analyze the performance of a classification model.